What Everybody Ought to Know About Whether BERT Is Always Better Than LSTM

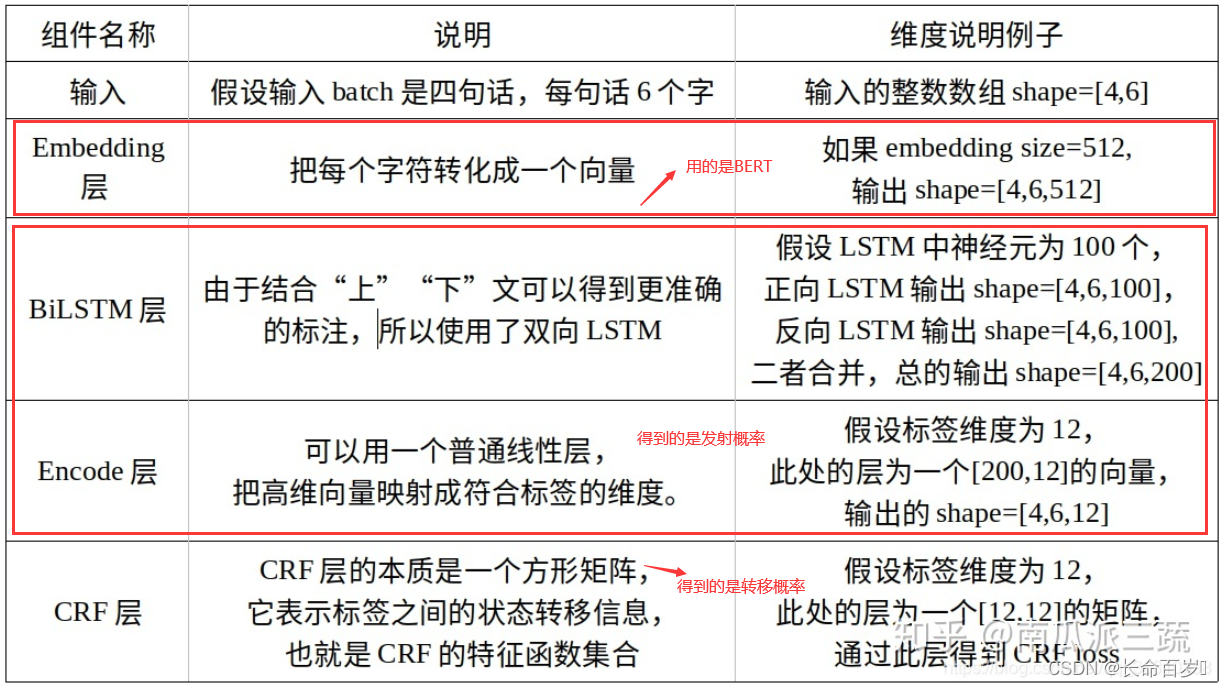

Vanilla LSTM vs. stacked LSTM:

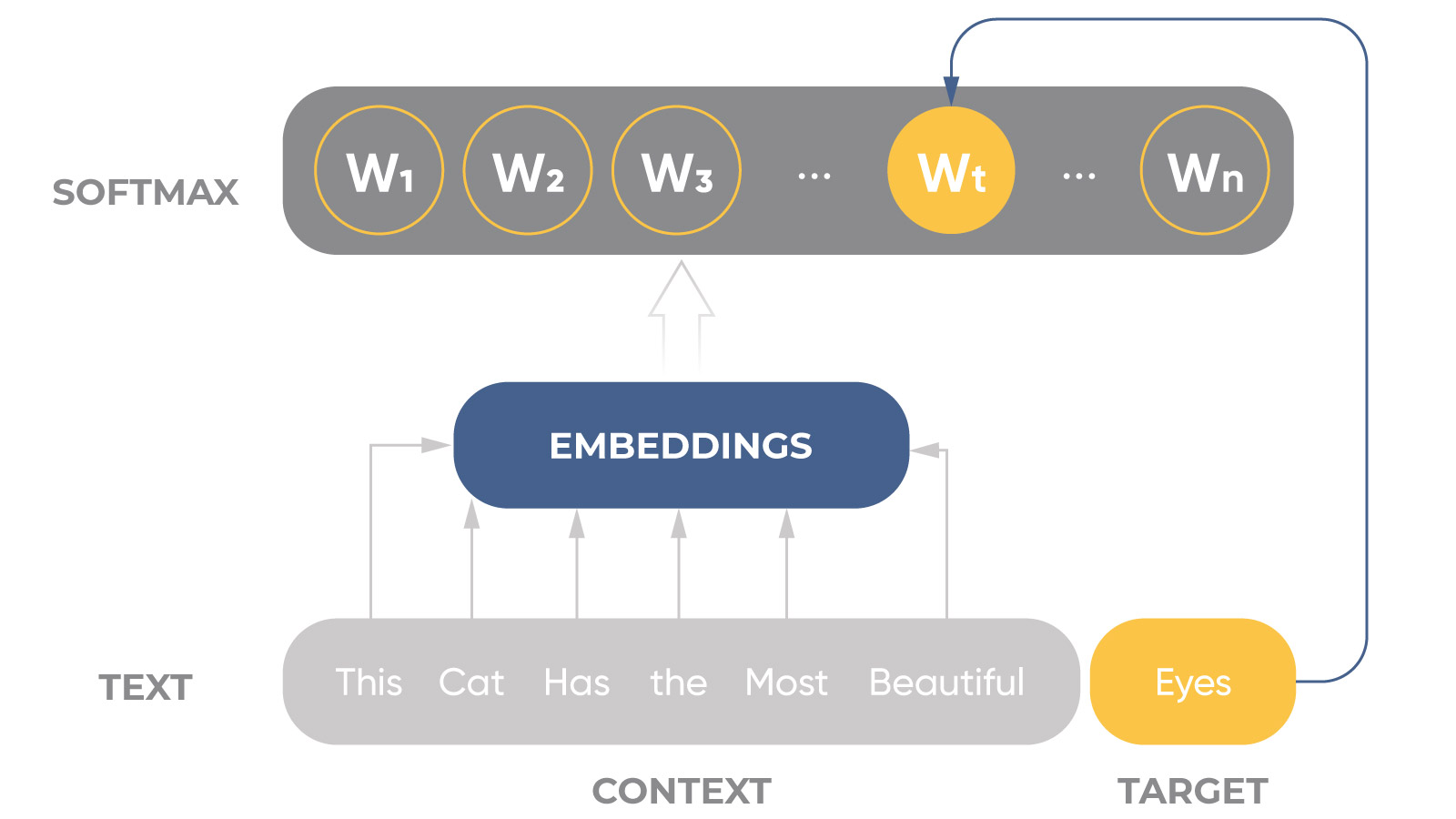

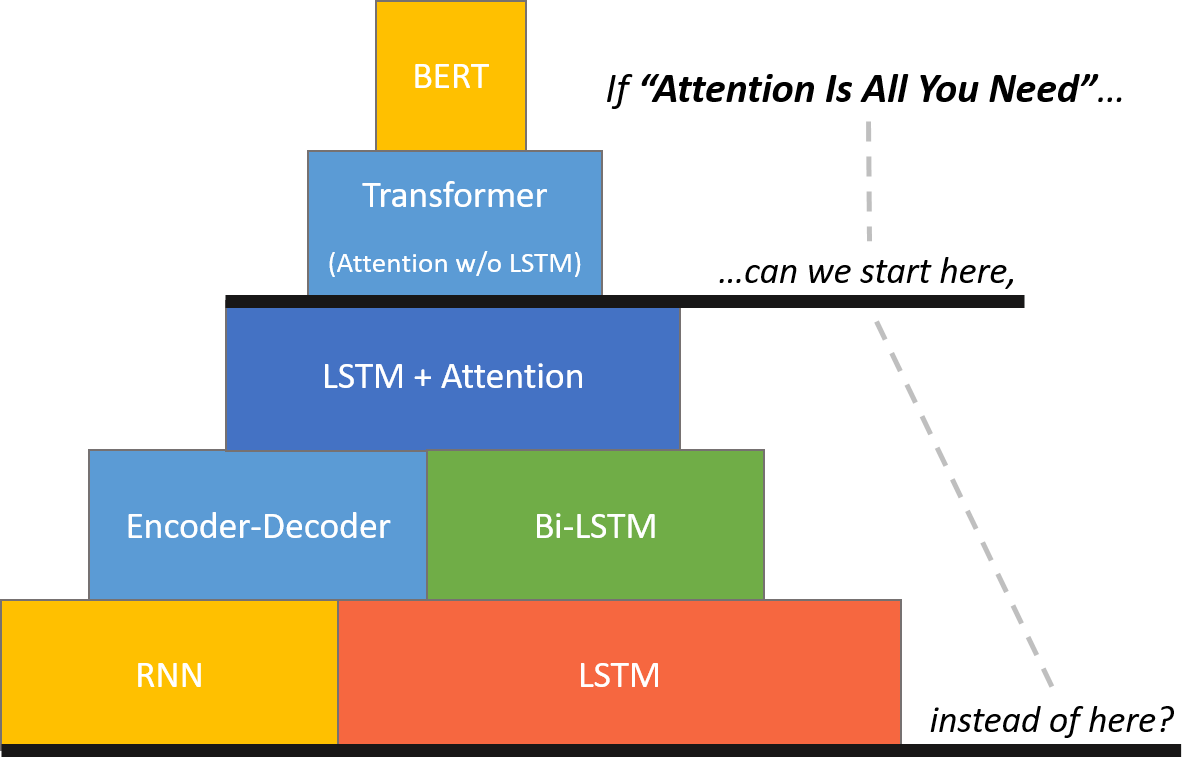

Is BERT always better than an LSTM? To summarize, transformers often outperform recurrent architectures because they avoid recurrence entirely and process a sentence as a whole. In interviews, I have often been asked why BERT (and similar models) works so well compared with other NLP models such as LSTMs. Bidirectional Encoder Representations from Transformers (BERT) is a language model based on the transformer architecture, notable for its dramatic improvement over previous state-of-the-art models.

BERT achieves high accuracy for several reasons. But is BERT always better than an LSTM?

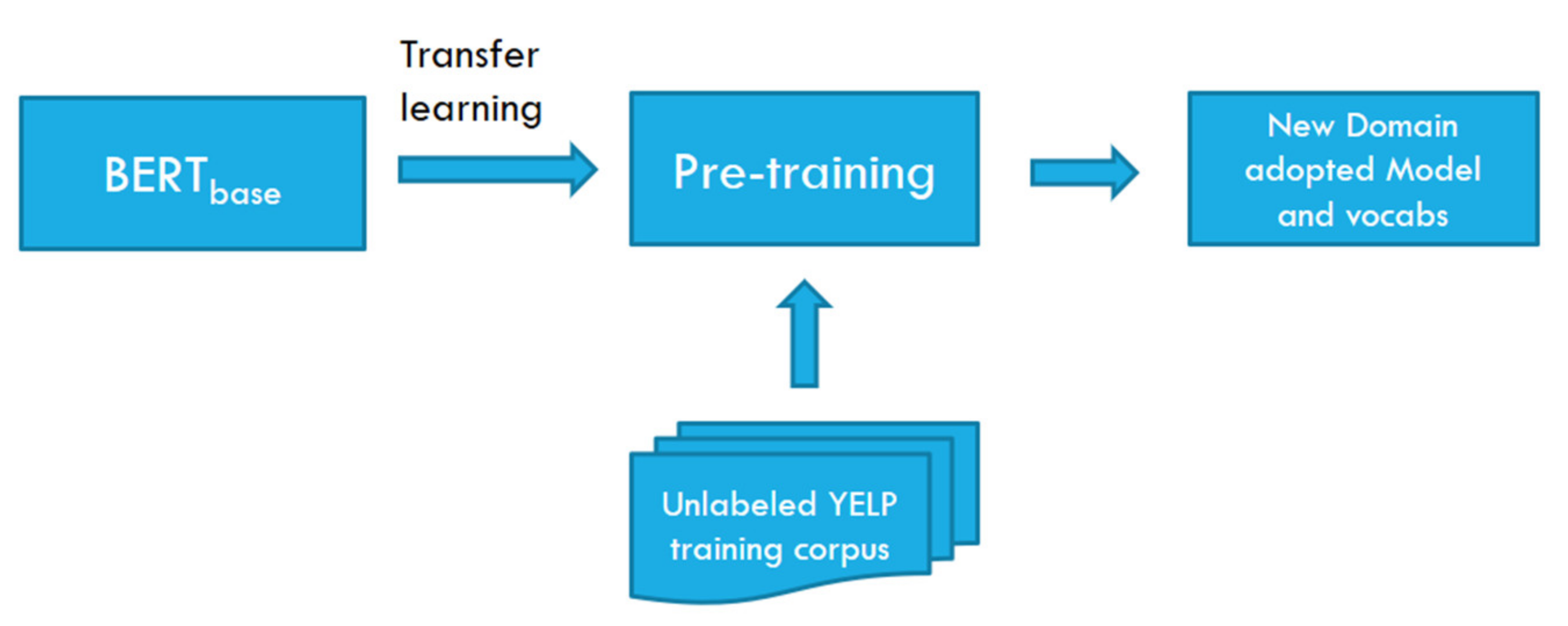

Bidirectional Encoder Representations from Transformers (BERT) is a classic example of transfer learning; it was introduced by the Google AI team in 2018. You should also know that comparing BERT with LSTMs is not a fair comparison: with BERT you are doing transfer learning, so it benefits from its pretraining.

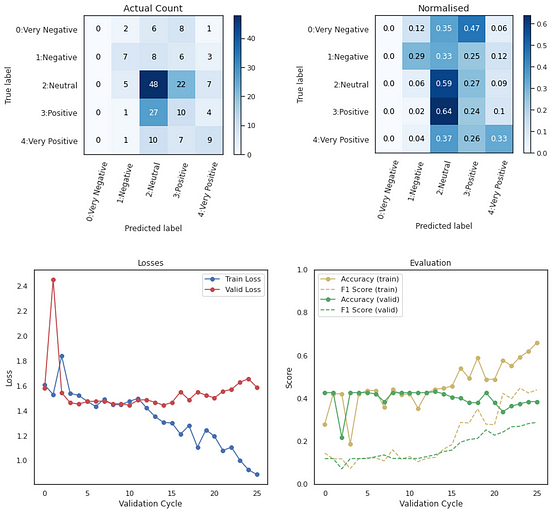

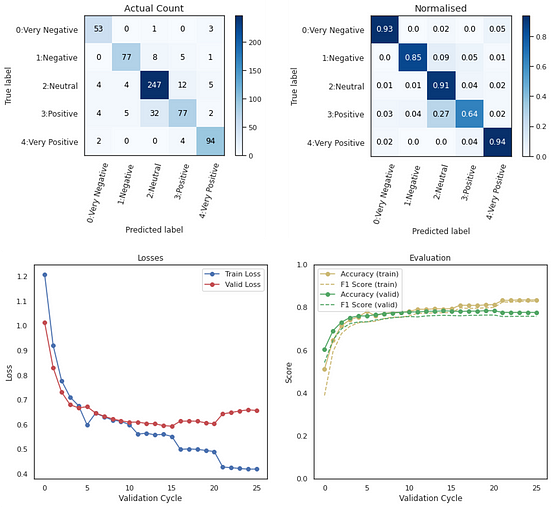

BERT considers both left and right context when learning word representations. Why does BERT perform better than an LSTM? For all three models, we calculate performance metrics such as precision, recall, AUC, and accuracy.
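The metrics named above can be computed by hand for a binary classifier. The following is a minimal sketch, assuming labels are 0/1 and the model outputs a score per example; the function names are hypothetical and not taken from any of the compared models.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def precision_recall_accuracy(y_true, y_pred):
    """Return (precision, recall, accuracy); empty denominators give 0.0."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    accuracy = (tp + tn) / len(y_true)
    return precision, recall, accuracy


def roc_auc(y_true, scores):
    """AUC as the chance that a random positive outscores a random negative.

    Ties count as half a win. This pairwise form is quadratic in the
    number of examples, which is fine for a small illustration.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    labels = [1, 0, 1, 1, 0, 0]
    scores = [0.9, 0.4, 0.6, 0.3, 0.2, 0.7]
    preds = [1 if s >= 0.5 else 0 for s in scores]  # 0.5 threshold is an assumption
    print(precision_recall_accuracy(labels, preds))
    print(roc_auc(labels, scores))
```

Running the same functions on the predictions of each model gives numbers that can be compared side by side.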

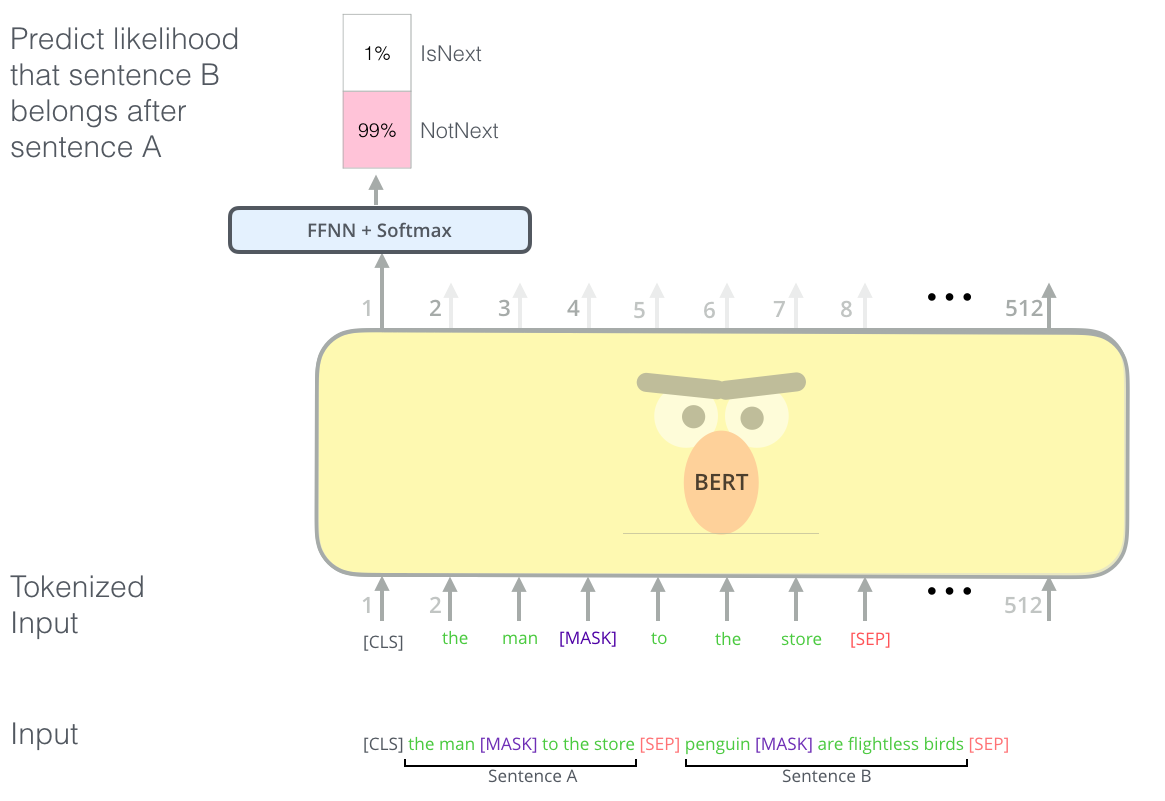

BERT uses transfer learning to apply previously learned knowledge in a new setting. What is the optimal number of LSTM layers? BERT is deeply bidirectional because of its masked language modeling technique.
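Masked language modeling can be illustrated with a small sketch of the input corruption step. It assumes the commonly described BERT recipe: about 15% of tokens are selected, and of those 80% become `[MASK]`, 10% become a random token, and 10% stay unchanged. The function name, the toy vocabulary, and the seed are hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) for masked language modeling.

    labels[i] holds the original token where a prediction is required
    and None elsewhere. The model sees context on both sides of each
    masked position, which is what makes the training bidirectional.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # replace with a random token
        # otherwise the token is left unchanged but still predicted
    return corrupted, labels


if __name__ == "__main__":
    sentence = "the movie was surprisingly good and well acted".split()
    toy_vocab = ["the", "movie", "was", "good", "bad", "acted", "plot"]
    print(mask_tokens(sentence, toy_vocab, mask_prob=0.3, seed=1))
```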

CNN vs. LSTM vs. BERT for NLP. Compared with BERT, the LSTM achieved statistically significantly higher accuracy on both the validation data and the test data. 1) BERT captures the contextual meaning of words by considering the surrounding words on both sides.

Bidirectional Encoder Representations from Transformers (BERT) has revolutionized many NLP tasks. An LSTM, at its core, preserves information from inputs that have already passed through it by using the hidden state. BERT is a pretraining technique.
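To make the role of the hidden state concrete, here is a minimal sketch of a single-unit LSTM written with scalar weights. The weight values are arbitrary assumptions chosen for illustration, not trained parameters, and the function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a single-unit LSTM.

    w maps each block name to a (w_x, w_h, bias) triple.
    """
    def pre(name):
        w_x, w_h, b = w[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))        # how much new information to write
    f = sigmoid(pre("forget"))       # how much old cell state to keep
    o = sigmoid(pre("output"))       # how much cell state to expose
    g = math.tanh(pre("candidate"))  # proposed new content
    c = f * c_prev + i * g           # updated cell state
    h = o * math.tanh(c)             # updated hidden state
    return h, c


def run_lstm(xs, w):
    """Feed a sequence through the cell, starting from zero state."""
    h, c = 0.0, 0.0
    for x in xs:
        h, c = lstm_step(x, h, c, w)
    return h, c


if __name__ == "__main__":
    # Arbitrary illustrative weights (an assumption, not learned values).
    weights = {name: (1.0, 0.5, 0.0)
               for name in ("input", "forget", "output", "candidate")}
    print(run_lstm([1.0, 0.0, 0.0], weights))
    print(run_lstm([0.0, 0.0, 0.0], weights))
```

The two sequences differ only in their first input, yet the final hidden states differ, which is the sense in which the hidden state carries earlier inputs forward.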

Our experimental results show that bidirectional LSTM models can achieve significantly higher results than a BERT model for a small dataset. In addition, the experimental results showed that for smaller datasets, BERT overfits more than a simple LSTM architecture.

The key difference between a GRU and an LSTM is that a GRU has two gates (reset and update gates), whereas an LSTM has three gates (namely input, output, and forget gates).
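One practical consequence of the gate counts is model size. The sketch below assumes the textbook formulation, in which each gate and the candidate transformation has one input weight matrix, one recurrent weight matrix, and one bias vector. Some library implementations keep additional bias vectors, so their reported counts can differ. The function names are hypothetical.

```python
def recurrent_params(input_size, hidden_size, n_blocks):
    """Parameters in one recurrent layer with n_blocks weight blocks.

    Each block holds hidden_size x (input_size + hidden_size) weights
    plus hidden_size biases.
    """
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return n_blocks * per_block


def lstm_params(input_size, hidden_size):
    # Three gates (input, forget, output) plus the candidate block.
    return recurrent_params(input_size, hidden_size, 4)


def gru_params(input_size, hidden_size):
    # Two gates (reset, update) plus the candidate block.
    return recurrent_params(input_size, hidden_size, 3)


if __name__ == "__main__":
    d, h = 10, 20
    print("LSTM:", lstm_params(d, h))
    print("GRU: ", gru_params(d, h))
```

Under these assumptions a GRU layer has three quarters of the parameters of an LSTM layer of the same size.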

![LSTM-based sentiment analysis for stock price forecast [PeerJ]](https://dfzljdn9uc3pi.cloudfront.net/2021/cs-408/1/fig-2-2x.jpg)

![[Study6] NLP (Natural Language Processing): from RNN/LSTM to BERT, Alex’s Blog](https://sungalex.github.io/img/study6/BERT_Structure.png)